Innovation

Artificial Intelligence

Generative AI is not just a nice-to-have; it is the basis for future business success. Mindbreeze InSpire provides the factual foundation for the secure use of large language models (LLMs) in the enterprise.

Accelerated knowledge gain

Mindbreeze InSpire uses various AI methods to reduce the time and effort required to gain insights from information.

Personalized and contextualized results

Using artificial intelligence methods such as deep learning and large language models, Mindbreeze InSpire interacts with employees and customers in a highly personalized manner.

Process automation

Mindbreeze InSpire streamlines, simplifies, and automates time-consuming business processes for your employees.

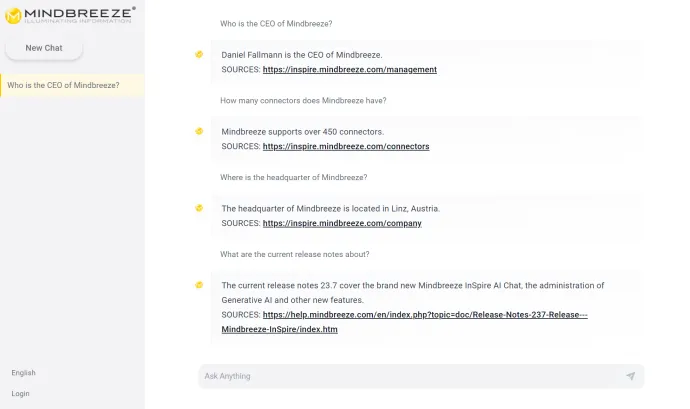

Quick answers with the Mindbreeze InSpire AI Chat

Open standards

Free choice of LLMs

Whether you prefer GPT from OpenAI, Llama 2 from Meta, or models from Hugging Face, you can combine Mindbreeze InSpire with the large language model of your choice and generate remarkably human-like answers and insights from internal company data. Mindbreeze relies on and supports open standards such as ONNX (Open Neural Network Exchange).

Sources

Comprehensible answers from LLMs

Easily ensure data protection in your company. You choose which data your LLM uses to generate content, which helps you avoid hallucinations, i.e., answers that sound convincing but may be incorrect. Displayed sources let you check the answers against the underlying facts and guarantee precision at all times.

Optimization

Continuous improvement through learning

Benefit from the ongoing refinement and optimization of results based on experience from past human interactions. Mindbreeze InSpire learns from you and adapts more and more to your needs.

The queries and behavior of your employees are neither transmitted to nor evaluated by third-party providers.

Method

Relevance Model

Using a relevance model based on machine learning and neural networks, Mindbreeze InSpire analyzes user behavior (previous searches, interactions with hits) to predict which content is relevant.

Generative AI (GenAI) and tools like ChatGPT have taken the world by storm. However, for these technologies to be used professionally in companies, numerous challenges must be overcome: for example, hallucinations, lack of data security, authorizations, critical intellectual property issues, expensive training costs, and technical implementation with confidential company data. Mindbreeze InSpire solves these challenges and forms the ideal basis for making generative AI fit for corporate use.

Jakob Praher, CTO Mindbreeze

Generative AI Demo

See for yourself

See how generative AI and natural language question answering (NLQA) are made possible in every Mindbreeze InSpire Insight App through Language Prompt Engineering technology.

Natural Language Question Answering

Mindbreeze InSpire NLQA Use Case

Retrieval Augmented Generation

Mindbreeze InSpire RAG Use Case

Frequently asked questions

Contact us

AI is no longer a nice-to-have!

Let's talk.

Our team will be happy to answer your questions about Mindbreeze InSpire. In this exclusive white paper, you will learn how Large Language Models (LLMs) can help you overcome data and knowledge silos and how to safely use generative AI for critical business decisions.
