What's new? We present the Mindbreeze InSpire AI Chat

In the following blog post, we present the Mindbreeze InSpire AI Chat, the most important innovation in Mindbreeze InSpire Release 23.7. Mindbreeze customers can now use the Mindbreeze InSpire AI Chat on-premises, as SaaS, and in the cloud.

Mindbreeze InSpire AI Chat

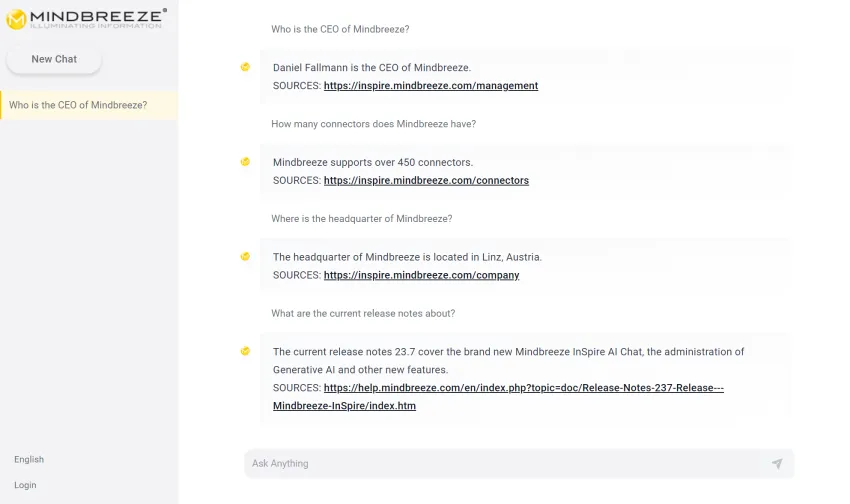

With the 23.7 release, Mindbreeze InSpire offers a new way of gathering information: similar to ChatGPT, the Mindbreeze InSpire AI Chat summarizes relevant facts in natural language. Source information overlaid on each answer gives users additional context, so they can understand answers quickly and easily.

How does Mindbreeze InSpire AI Chat work?

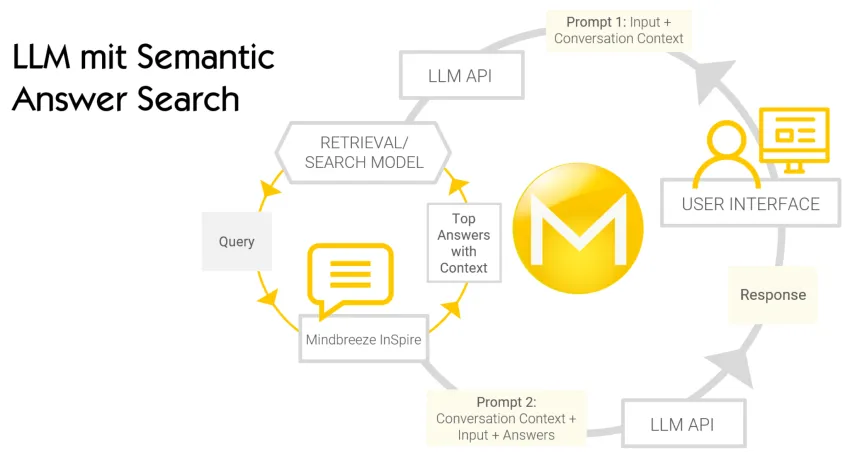

Generative AI forms the technological basis for Mindbreeze InSpire AI Chat. Using Retrieval Augmented Generation (RAG), the chat collects the necessary facts from Mindbreeze InSpire while accounting for each user's specific access rights. A Large Language Model (LLM) then processes the collected facts and generates relevant answers from them. The graphic above shows what this process looks like.
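To make the order of operations more concrete, here is a minimal Python sketch of permission-aware RAG. It is not Mindbreeze's implementation. The `Document` type, the group-based access check, and the word-overlap ranking (a stand-in for real keyword or vector search) are hypothetical simplifications. The sketch only illustrates the flow described above: keep the facts the user may read, rank them against the question, and assemble a grounded prompt that would then be sent to an LLM (the LLM call itself is not shown).

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    """Hypothetical indexed fact with a simple group-based access list."""
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # groups permitted to read this document


def tokenize(text: str) -> Set[str]:
    """Lowercased word set; a toy stand-in for a real index's text analysis."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, docs: List[Document], user_groups: Set[str], k: int = 2) -> List[Document]:
    """Return up to k documents the user may read, ranked by word overlap with the query."""
    q = tokenize(query)
    # Access rights are enforced first, so forbidden facts never reach the prompt.
    permitted = [d for d in docs if d.allowed_groups & user_groups]
    scored = [(len(q & tokenize(d.text)), d) for d in permitted]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, facts: List[Document]) -> str:
    """Combine the retrieved facts and the question into a prompt for an LLM."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in facts)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Usage with made-up example data.
docs = [
    Document("hr-1", "Vacation requests are approved by the team lead.", frozenset({"employees"})),
    Document("fin-7", "Vacation payouts are calculated by payroll.", frozenset({"finance"})),
]
question = "Who approves vacation requests?"
facts = retrieve(question, docs, user_groups={"employees"})
print([d.doc_id for d in facts])  # ['hr-1']
print(build_prompt(question, facts))
```

Filtering by access rights before ranking is the key design choice here: a fact the user is not allowed to see is never placed in the prompt, so the LLM cannot repeat it in an answer.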

Support for a broad range of connectors ensures that answers are up to date. Semantic connections map each organization's individual information landscape and enrich existing information with additional context. This gives users more comprehensive answers, while various authentication options keep data secure at all times.

Creation and management of AI chat pipelines

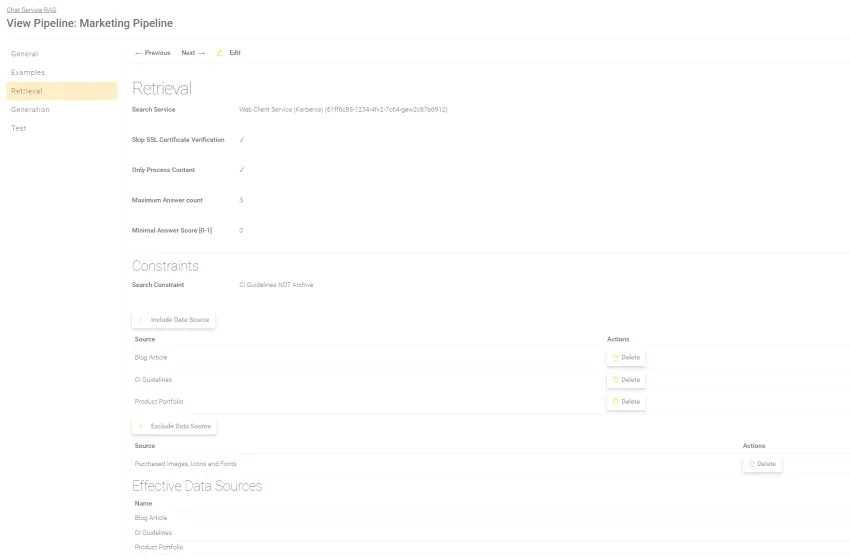

Data sources configured in the Mindbreeze Search Client can be seamlessly transferred and used immediately in Mindbreeze InSpire AI Chat. With just a few clicks, administrators can create individual models and pipelines and control them precisely with the help of restrictions and fine-tuning. It is also possible to add or exclude data sources.

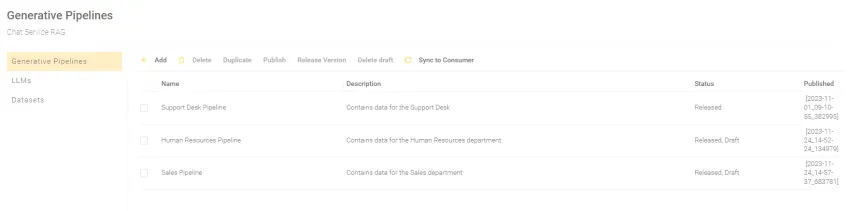

You can specialize models for different departments, as shown in the screenshot above. Versioning also makes it possible to reuse older models.

This allows administrators to create customized models for different use cases efficiently.
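As an illustration of how data source selection and versioning fit together, the following Python sketch models a versioned pipeline configuration. It is purely hypothetical: `PipelineConfig`, `PipelineRegistry`, and every field name are invented for this example and do not reflect Mindbreeze's actual configuration format or administration interface, which is managed through the product's own UI.

```python
from dataclasses import dataclass, replace
from typing import Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class PipelineConfig:
    """Hypothetical pipeline settings; all field names are illustrative only."""
    name: str
    version: int
    data_sources: FrozenSet[str]
    excluded_sources: FrozenSet[str] = frozenset()
    max_sources_per_answer: int = 5  # example of a restriction on answers

    def active_sources(self) -> FrozenSet[str]:
        """Data sources the pipeline actually queries after exclusions."""
        return self.data_sources - self.excluded_sources


class PipelineRegistry:
    """Keeps every saved version of each pipeline so older ones can be reused."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[PipelineConfig]] = {}

    def save(self, config: PipelineConfig) -> None:
        self._versions.setdefault(config.name, []).append(config)

    def get(self, name: str, version: Optional[int] = None) -> PipelineConfig:
        """Return the latest version, or a specific one if requested."""
        versions = self._versions[name]
        if version is None:
            return versions[-1]
        return next(c for c in versions if c.version == version)


# Usage: a department-specific pipeline with two versions.
registry = PipelineRegistry()
v1 = PipelineConfig(
    "hr-assistant", 1,
    data_sources=frozenset({"intranet", "hr-wiki", "email"}),
    excluded_sources=frozenset({"email"}),
)
registry.save(v1)
registry.save(replace(v1, version=2, excluded_sources=frozenset()))
print(sorted(registry.get("hr-assistant").active_sources()))     # ['email', 'hr-wiki', 'intranet']
print(sorted(registry.get("hr-assistant", 1).active_sources()))  # ['hr-wiki', 'intranet']
```

Making each configuration immutable and storing every version, rather than editing one record in place, is what allows an older setup to be restored unchanged.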

You can find detailed information on our innovations and features in our release notes.

Contact our experts for further information.
